“Shakey” – 3D Feature Film

Director Kevin Cooper and Stereographer Tim Maupin

I recently completed work as stereographer on an independent feature film in Chicago called “Shakey”. This film was directed by Kevin Cooper, producer of “Secondhand Lions”. We used the Element Technica Quasar beam splitter rig and two Red One cameras.

http://www.imdb.com/title/tt1684225/

The film is a family comedy. The story is about a girl, her father and her dog leaving a small town in Ohio to move to the big city (Chicago) after the death of their wife/mother. There are problems immediately, as the dog is not allowed in their new ritzy townhouse apartment, and we meet a string of colorful characters who help or hinder them along the way. The film is ultimately about the little girl’s need for a friend in the dog, the father’s need for his new life to take off, and the realization that those two things can happen together.

My technique and developmental steps on the film were largely as follows:

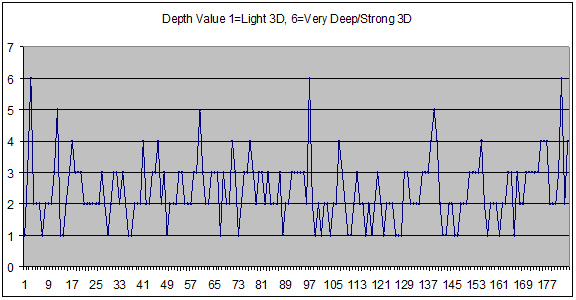

The first thing I did was to create a depth chart that plotted the amount of depth throughout the course of the film and scene to scene. I did this by simply assigning a number value of 0-6 for the amount of depth I wanted. This number does in fact correlate to a real measure, as I use 3% screen parallax as my maximum total parallax of positive and negative values. So I would simply take the depth value and cut it in half to arrive at the total percentage of screen parallax that was wanted for a particular scene. So a depth value of 3 would be a maximum of 1.5% in the positive and negative. I had several discussions with the director and DP about what we wanted to do thematically with the depth. I came up with three overall visions for the 3D.
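As a minimal sketch of the arithmetic described above (the function name is hypothetical and was not part of any tool used on the film), the depth value from the chart can be turned into a total parallax budget like this:

```python
# Sketch only: maps a depth-chart value (0-6) to the total screen parallax
# budget in percent of screen width, using the "cut it in half" rule above.
# The function name and the range check are illustrative assumptions.

MAX_DEPTH_VALUE = 6  # top of the 0-6 scale, corresponding to 3% total parallax


def total_parallax_percent(depth_value):
    """Return the combined positive + negative parallax budget in percent."""
    if not 0 <= depth_value <= MAX_DEPTH_VALUE:
        raise ValueError("depth value must be between 0 and 6")
    return depth_value / 2.0


if __name__ == "__main__":
    for value in range(MAX_DEPTH_VALUE + 1):
        print(value, "->", total_parallax_percent(value), "% of screen width")
```

A depth value of 3 gives a 1.5% budget, and the top value of 6 gives the 3% ceiling.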

One was to increase the depth slowly over the entire course of the film, so that we feel more and more immersed in the city and the story as the characters do, almost as if we live there with them. We determined that this was perhaps a bit too conceptual for this genre of film. It also might have been pretty impractical in terms of actual production.

The second was to make the 3D deeper and stronger when Shakey (the dog) and Chandler (the girl) were apart and have the 3D ‘softer’ or less deep when they or the family were together in frame or in a scene. This gets into a simple theory I will explain shortly. The third concept was to simply heighten the 3D with the suspense or action scenes, to sort of increase the 3D with the natural rises and falls of action or emotion. Our solution was to go with a mixture of the second and third.

So I mapped everything out with the depth chart, and we also had key scenes or shots in which we wanted to do something specific with the 3D, such as a few fun moments (it is a kids’ film after all) where we would have things fly into the negative space or toward the audience. Some refer to this as the more gimmicky 3D; however, I think in a kids’ film it is pretty safe territory. Imagine when you were young, how something coming out of the screen would in fact be very fun and memorable. In addition, if it’s done briefly at a few key moments where it makes sense, such as a food fight, or when the dog shakes water on everyone, it seems a perfect use to me.

Here is the depth chart used on Shakey:

I strongly believe 3D is largely another tool of cinematography and can be used in creative and artistic ways to help tell the story or serve the emotion of a moment. 3D has opportunities to guide emotion or story just like certain elements of 2D cinematography. Tried and true 2D techniques, such as using longer focal lengths to compress space or tighter frames to make the audience feel more claustrophobic in a scene where the character feels hopeless, are examples of the kind of conceptual technique you can also apply with 3D. As another example, something I suggested we try in this respect was to keep Chandler and JT (the father) distant from each other in 3D space during a fight they have. If you keep them spatially distant, there is now a real expanse between them, and the audience has to re-converge on each character in order to move back and forth, which can add to the slightly unsettling feeling of the scene. Of course, you want to be careful to still do it within reasonably safe parameters, but used with care this can be quite effective.

I used another technique during an emotional scene where they reconcile and have a ‘heart to heart’ talk. I began with them pushed behind the screen plane and, very slowly and gently as the conversation goes on, I pulled the convergence and brought them to the screen plane and finally just barely in front of it in negative space. As the DP said, this is the ‘b’ in subtle!

My theory for a lot of these techniques is that, because of the nature of convergence and how our eyes actually work, the most comfortable place to look in a stereographic image will be at the screen plane. This is also true because of the accommodation/convergence difference with our eyes. In nature, as we look around the room at various objects at various distances from us, our eyes converge and focus on the same thing. In a stereographic image this is not true: we are converging on various things at different depths on screen due to the disparities between the left and right images, while our eyes are still focused on the screen plane, as that is where the images are projected. It is amazing to me that this is actually possible. However, some people have a slightly harder time with this than others, and so it can be assumed that it is probably most comfortable for the eyes to focus and converge on the same thing. At the least, we can say that it is subconsciously more like our natural experience, and therefore more familiar and comfortable. As such, it is safe to assume that there is a natural comfort in placing objects at the screen plane.

Overall, I attempted to keep the film pretty safe, with very comfortable 3D, as this was my first feature and I wanted to be sure it was completely watchable by the largest number of people. It seems to be a difficult line to walk: I think for 3D to be a viable long term medium it needs to be fairly safe and comfortable for the majority of viewers, but at the same time, if the film doesn’t feel ‘3D enough’ to some people, they may ask why they are paying a higher ticket price. I personally think keeping the 3D largely ‘softer’ or more subtle, with big moments, will have a greater chance of keeping people around this time. Make it strong here or there where it needs to be, but primarily it should be comfortable for the lowest common denominator. I started to develop an idea that perhaps a film could be shot almost 2D in some places, so that it would be almost a ‘crossover’ film, so to speak. I mean, if you think about it, why do we have to have really strong 3D right out of the gate? People are still getting used to it, and so a film with mostly subtle 3D and the occasional moment or scene with ‘big 3D’ is really best for our situation currently. The brilliant people at Pixar seem to have arrived at this conclusion…

Continuing with my actual technique on the film, after the chart was made and we began production, I used two main techniques. The first was measuring distances and using a stereoscopic calculator to get the settings based on the parameters I selected. With this method I used a laser measuring device or occasionally a good old fashioned tape measure to get the distance from the sensor plane to the foreground object and then to the background object. Occasionally, your background object will be very distant and it is not possible to get an accurate measurement. In these cases I would measure the nearest thing that I could reach and estimate or simply set it to infinity. I use the IOD stereo calculator for iPhone.

It works very simply and, after multiple tests on actual screens, its math holds up exactly to screen parallax offset percentages. For this film, I set up the lens settings with our lens package of Red Pro primes (9.5mm, 18mm, 25mm, 35mm, 50mm, and 85mm). I used the Red sensor setting, 64mm for maximum background divergence, and finally the ‘large cinema’ screen setting of 60 feet, as I was told they wanted the maximum screen size that the film could wind up getting, depending on distribution.

I then set up the convergence wheel on our rig’s hand controller with convergence marks, as the Quasar is programmed to keep the convergence regardless of your IA. We did this by starting with a very solid alignment, then moving our chart incrementally further away, measuring the distance, converging on the chart and marking that position on the wheel. This made things much easier and held up for the entire show. We also made IA marks by the quarter inch, but these would occasionally change throughout the show after a more severe realignment. I would often check the IA on the rig and occasionally check the convergence by measuring, but these marks made things much quicker and were very accurate overall.

Once I had my foreground and background set, I would choose the convergence based on the shot and my original chart/map. Since we had a very nice full 3D polarized JVC 40″ monitor on set, I was able to watch a rehearsal in very good 3D and get a feel for the scene and where the convergence should be. Once I had that, I would set my marks if necessary and pull convergence or IA as necessary. Our 3D logger would then take down the IA and convergence, as well as any notes on known alignment problems. Within a week I also started adding a simple note to help me remember where the convergence was in relation to the screen plane, to aid in our effort to keep depth continuity as close as possible. I would add an S, P, or N, or combinations such as S – P to illustrate one moving to the other. These of course stand for screen plane, positive and negative space, respectively. There were shots where it didn’t break down this simply, of course, but most often it was very helpful.

We made a great effort to control the depth continuity as much as possible, keeping the depth similar from shot to shot and trying to avoid depth jump cuts, where a cut goes from something with stronger positive parallax to negative. To do this, the script supervisor would take down the 3D logger’s notes. I would then confer with the script supervisor every morning with our daily shot/scene list to match settings based on previous days. Obviously, it is impossible to know exactly how a cut will go in the edit, but it seems best to try to get as close as you can on set to leave less work in post. Many depth cutting issues can be adjusted in post through re-converging by horizontal image translation and active depth cuts.

Active depth cuts are an animated re-convergence that takes a few frames and begins to converge towards a middle point at the cut on the outgoing footage and picks up with that convergence on the incoming footage and continues to the final convergence within a few more frames. All in all it is a very quick ‘sliding’ of one convergence to another within a cut that isn’t perceived by the audience.
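To make the idea concrete, here is a rough sketch of how the convergence values around such a cut could be generated. It assumes a simple linear ramp and expresses convergence as a single horizontal offset number per frame; the function name, the units and the five-frame default are all illustrative assumptions, not what any particular post tool does.

```python
# Sketch only: per-frame convergence offsets for an active depth cut.
# Assumptions (not from the film's post pipeline): a linear ramp, one
# offset value per frame, and the same ramp length on both sides of the cut.


def active_depth_cut(outgoing, incoming, frames=5):
    """Return (outgoing_ramp, incoming_ramp) of convergence offsets.

    The outgoing ramp covers the last `frames` frames before the cut and
    ends at the midpoint; the incoming ramp covers the first `frames`
    frames after the cut, starts at that midpoint and ends at the
    incoming shot's own convergence.
    """
    if frames < 2:
        raise ValueError("need at least 2 frames on each side of the cut")
    mid = (outgoing + incoming) / 2.0
    steps = frames - 1
    out_ramp = [outgoing + (mid - outgoing) * i / steps for i in range(frames)]
    in_ramp = [mid + (incoming - mid) * i / steps for i in range(frames)]
    return out_ramp, in_ramp


if __name__ == "__main__":
    out_ramp, in_ramp = active_depth_cut(1.0, -1.0, frames=5)
    print("before cut:", out_ramp)
    print("after cut: ", in_ramp)
```

The last frame before the cut and the first frame after it share the same convergence, which is why the change reads as a quick slide and not a jump.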

Once I had input my foreground and background distances, I could input my selected convergence and IOD would calculate the correct IA setting. At this point, I didn’t really know the math behind it, and it seemed to be very accurate based on my other method, so I trusted it. It has only been in the last month that I have developed a rough understanding of the various schools of thought on the complex math behind it by reading Lenny Lipton’s out of print 1982 book. The farthest point isn’t always the background per se, as sometimes it is a very small portion of the screen. Or, in cases of an anticipated quick shot in which no one will really have time to look at the background, you can often set it at something closer, thereby allowing a stronger 3D setting.

Overall I would say I had an average IA of roughly ½ inch. I found myself around the ¼ inch mark for a lot of shots, but would get up near ¾ and 1 inch occasionally, with a maximum IA of 3 inches for a few wide shots. I would often converge on the actors or just in front of them to give the film a bit more of a ‘window look’, as I called it. This put most of the action behind the screen and makes it appear more like a window into the film world.

A topic that is often brought up is the ongoing debate over whether all films should be 3D, or if there are certain films that don’t need 3D and some films that are best for it. This is a controversial topic as we obviously still watch black and white and maybe on great occasions a silent film, so clearly even if 3D does completely become the dominant format, 2D films will still be made. I can see the argument that certain films won’t benefit much if at all from 3D. It is hard to imagine that a film would be more humorous if it were in 3D. There are instances with visual humor or gags that 3D could certainly help, but on the whole a comedy film would probably remain just as funny (or not funny) in 2D or 3D. It is very similar to a comedy in color vs. black and white. Very little would change for the joke: as long as you could still see the action or character, the joke would likely work. The only thing that might affect this is the color or 3D making it feel more real, which may change the reaction slightly based on this psychology. This applies to other genres as well and it becomes more of a situational characteristic. I think there are obvious fits for 3D, mostly films that have a strong sense of physical place that ties in with the story.

Although this is often disagreed upon, I personally feel as though 3D can be used in drama to great success. To me, it ties in naturally with the cinematography to define a sense of physical space and ultimately the mood and emotion of a film. I think by also coming closer to ‘inserting’ one’s sense of existing into the film world, it is more possible for them to feel more as the characters do. I think it can also be used in clever ways to achieve a certain feeling within an audience as well. Drama is where I’m more of an author in the film world and this is the area that I am most interested to explore in 3D. My other fascination with 3D is that it is still very much an uncharted ocean of possibility. I liken it to a pure white field of untouched snow.

Getting back to my actual methodology, the other technique that I used in conjunction with the measure and calculate procedure was going off of screen parallax percentages. I would first measure the horizontal width of the screen that we were using. It is necessary to measure the actual viewable width, not the full width of the TV. Next, simply multiply that by 1%, 2% and 3% to get the actual percentages of screen width. You then use a ruler to measure and mark a small clear plastic card with the 1%, 2%, and 3% marks and you now have a great tool to measure actual screen parallaxes. This is where the depth chart would boil down to an actual usable numerical value. If I had a scene that I assigned the depth value of 4 to, I would simply divide that number in half and that would be my maximum on screen percentage that I would allow as a total of foreground and background. So if my background was 0.5% and my foreground was 1.5%, then I was right at the proper depth. The drawback to this method is that you need a fairly decent size on set viewing monitor or TV and your cameras need to be in good alignment to begin with. If they are horizontally off at zero (or when they are brought to zero IA), then your measure of horizontal will obviously be slightly off.
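The card marks and the budget check above are simple enough to sketch in a few lines. The function names are hypothetical, and the 35 inch viewable width is only an example figure, not a measurement of the monitor we used.

```python
# Sketch only: parallax card marks and a depth-budget check.
# Function names and the 35-inch example width are illustrative assumptions.


def card_marks(viewable_width, percents=(1, 2, 3)):
    """Return {percent: distance} marks in the same unit as viewable_width."""
    return {p: viewable_width * p / 100.0 for p in percents}


def within_depth_budget(depth_value, background_pct, foreground_pct):
    """True if background + foreground parallax fits the scene's budget.

    The budget is the depth-chart value divided in half, in percent of
    screen width. A tiny tolerance absorbs floating-point rounding.
    """
    budget = depth_value / 2.0
    return background_pct + foreground_pct <= budget + 1e-9


if __name__ == "__main__":
    # Example: a monitor with a 35-inch viewable width (hypothetical figure).
    for pct, inches in card_marks(35.0).items():
        print(f"{pct}% mark at {inches:.2f} in")
    # The example from the text: depth value 4, 0.5% behind, 1.5% in front.
    print(within_depth_budget(4, 0.5, 1.5))
```

On a 35 inch wide image the 1% mark lands at 0.35 inches, which shows why a reasonably large monitor is needed for the card to be readable.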

In terms of alignment, I worked with two great rig techs that would go the extra mile to ensure a good alignment of the cameras. Initially, we took great pains and halted the set occasionally to get a good alignment. As we traveled on, we began to realize that with modern post correction tools, it becomes a fine balance of knowing when an alignment is bad enough to warrant a halt in production and when getting the shots in the can is more important. Personally, I would say it is important to get it as close as you can within a small amount of time, and not waste too much precious production time. If there is a major misalignment, it is most likely worth it to take some time to fix it, but most minor offsets can now be fixed rather easily with great post software and additionally the human vision system is capable of dealing with a minor amount of offset. Over the course of time, minor offsets will wear on a viewer, but a few shots or a scene won’t be as noticeable if kept few and far between. The only other caveat to this is the use of the above described method of checking screen parallax. For this, it is very important to ensure at least a good horizontal alignment.